#AI made huge progress during spring and summer 2022. there are a handful of portals built on large-scale training of neural networks that offer a text-to-image workflow. in the example(s) above the same input prompt was used:

“A detailed portrait of Queen Elisabeth II and Charles III. Painted by a Ming Dynasty painter. Highly detailed dress and suit. A crown of ketchup for both.”

please keep in mind that the AI “diffusion” process offers an unlimited number of outputs for our simple text. for a start, DALL·E 2 offered four variations, as did Midjourney.

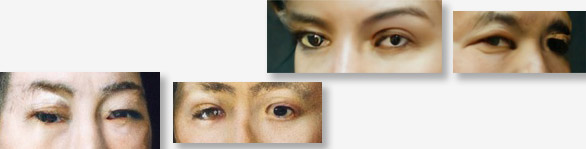

i find the similarities striking, because the two systems often create totally different scenarios. both respect the aesthetics of the ming dynasty (1368 to 1644). both mix the “real” faces of the queen and king with possible chinese counterparts. we see detailed structures of gold, red and black in the couple’s outfits. both systems have improved when it comes to eyes and hands.

there are still minor irritations in the eyes. DALL·E 2 does a good job with one hand, but not with the right hand of the queen. Midjourney shows no hands at all, only glimpses of misplaced fingers of the queen. Midjourney seems not to have learnt the concept of sleeves and leaves them empty. and it does not seem to know that the two are mother and son, probably because charles became king only recently and there was not enough training data available.

both backgrounds are excellent. they not only respect my wish for portraits (no distracting background) but also introduce a humble blend of colours: a dark brown and a dark turquoise. both systems disrespect my wish for ketchup crowns – maybe out of respect for the royals?

thanks to michael interbartolo III, who created the Midjourney version with my prompt!